Entropy is a central concept in physics, and it is also applied in other fields such as chemistry, economics, and cosmology. In physics it belongs to thermodynamics, and it is a fundamental idea in physical chemistry.

Entropy is an extensive property of a thermodynamic system, which means that its value depends on the amount of matter present. It is measured in joules per kelvin (J·K⁻¹, equivalently kg·m²·s⁻²·K⁻¹) and is typically symbolized by the letter S in equations. Entropy measures the number of alternative ways in which a system can be organized and arranged. This article covers the definition of entropy, how it increases, its formulas, and its link to the second law of thermodynamics.

What is entropy?

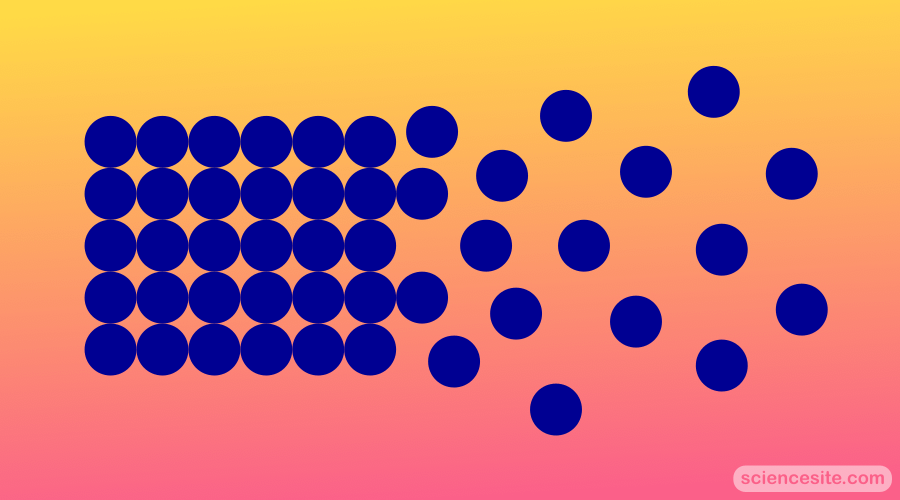

To get a feel for entropy, consider a bag of balls. Take one ball from the bag and place it on the table. How many ways are there to arrange it? Just one. Now take two balls and do the same thing. This time there are two ways to arrange the balls on the table. If we keep going until every ball is on the table, the number of possible arrangements becomes enormous. Why use this example? Because this is what entropy looks like.
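The growth in the number of arrangements can be sketched in a few lines of Python. This is only an illustration, and it assumes the balls are distinguishable and laid out in a single row, so that n balls have n! possible orderings; the function name `count_arrangements` is made up for this example.

```python
import math


def count_arrangements(n_balls: int) -> int:
    """Number of orderings of n distinguishable balls in a row (n!).

    Assumption for this sketch: every ball is different and only the
    left-to-right order on the table matters.
    """
    return math.factorial(n_balls)


# One ball has one arrangement, two balls have two, and the count
# then grows very quickly as more balls are added.
for n in (1, 2, 3, 5, 10):
    print(n, "balls:", count_arrangements(n), "arrangements")
```

Even ten balls already give millions of orderings, which is why a system made of many particles has such a huge number of possible configurations.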

In this case, entropy is defined as the number of possible configurations for a system. The higher the entropy (the number of possible arrangements for a system), the more chaotic it is. Spraying perfume in the corner of a room is another illustration of this notion of entropy. We all know what comes next. The perfume will not remain in that corner of the room. The perfume molecules will eventually fill the whole space. By spreading through the room, the perfume moves from being well-ordered to being chaotic.

How does entropy increase?

The second law of thermodynamics states that in any cyclic process, the total entropy of the system and its surroundings will either remain constant or increase. The entropy will not change if the cyclic process is reversible. Entropy will increase when a process is irreversible.
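A simple numerical illustration of an irreversible process is heat flowing from a hot body to a cold one. The sketch below assumes two large reservoirs whose temperatures stay constant, so that each one changes its entropy by the heat it receives divided by its absolute temperature; the function name and the numbers are illustrative only.

```python
def total_entropy_change(heat_j: float, t_hot_k: float, t_cold_k: float) -> float:
    """Total entropy change (J/K) when heat_j joules flow from hot to cold.

    Assumption for this sketch: both reservoirs are large enough that
    their temperatures do not change during the transfer.
    """
    ds_hot = -heat_j / t_hot_k    # the hot reservoir loses heat
    ds_cold = heat_j / t_cold_k   # the cold reservoir gains the same heat
    return ds_hot + ds_cold


# 1000 J flowing from 400 K to 300 K: the total entropy increases.
print(total_entropy_change(1000.0, 400.0, 300.0))

# With equal temperatures the total change is zero, which is the
# reversible limit.
print(total_entropy_change(1000.0, 300.0, 300.0))
```

The cold reservoir gains more entropy than the hot one loses, so the total is positive whenever the two temperatures differ.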

The best way to explain a reversible process is to compare it to watching a film. The process is reversible if you can't tell whether the movie is playing forward or backward. If you can tell that the movie is playing backward, though, the process is irreversible. Frying an egg, for example, is irreversible, as is blowing up a skyscraper. If you film these processes, you can easily tell forward from reverse.

A change of state, such as melting a chunk of steel and letting it solidify again, is a good example of a process that can be reversed. Because the changes are only physical, they can be undone. You won't be able to tell forward from reverse if you make a movie of it. Most microscopic processes are reversible.

What is a change in entropy?

Entropy change can be described as a shift in a thermodynamic system's state of disorder that accompanies the transfer of heat or its conversion into work. The more disordered a system is, the higher its entropy.

Entropy is a state function, which means that its value is independent of the path taken by the thermodynamic process and depends only on the system's initial and final states. The changes in entropy in chemical reactions are caused by the rearrangement of atoms and molecules, which alters the system's initial order. This can increase or decrease the system's randomness, and therefore its entropy.

Thus, the higher the entropy in an isolated system, the greater the disorder. The entropy change in a chemical reaction can also be attributed to the rearrangement of atoms or ions from one pattern to another. If the molecules in the products are much more disordered than those in the reactants, the reaction results in an increase in entropy.

Thus, the change in entropy accompanying a chemical process can be estimated qualitatively from the disorder of the structures of the species taking part in the reaction. A crystalline solid, for example, has lower entropy than a less ordered solid.

Adding heat to a system increases its randomness because molecular motion increases. A system at a higher temperature has more randomness than the same system at a lower temperature. As a result, temperature plays a role in determining the randomness of the particles in a system.

When heat is added to a system at a lower temperature, it creates more randomness than when the same amount of heat is added at a higher temperature. As a result, for a given amount of heat, the entropy change is inversely proportional to the system's temperature.

How to write entropy equations and do calculations?

The two most frequently used entropy equations apply to reversible thermodynamic processes and to isothermal (constant-temperature) processes, respectively.

⦁ Reversible process entropy

When calculating the entropy of a reversible process, some assumptions are made. Probably the most crucial one is that each configuration of the system is equally likely (which may not actually be the case). Under that assumption, entropy equals Boltzmann's constant (kB) multiplied by the natural logarithm of the number of possible states (W):

S = kB ln W
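As a rough sketch of how this formula can be evaluated, the Python snippet below computes S for a given number of states W. The function name `boltzmann_entropy` and the example system (100 independent two-state particles, so W = 2^100) are assumptions made for illustration; the value of Boltzmann's constant is the exact SI value.

```python
import math

K_B = 1.380649e-23  # Boltzmann's constant in J/K (exact SI value)


def boltzmann_entropy(num_states: int) -> float:
    """Entropy S = kB * ln(W) in J/K, assuming W equally likely states."""
    if num_states < 1:
        raise ValueError("the number of states W must be at least 1")
    return K_B * math.log(num_states)


# A system with a single possible state has zero entropy.
print(boltzmann_entropy(1))

# Hypothetical example: 100 independent two-state particles, W = 2**100.
print(boltzmann_entropy(2**100))
```

Because of the logarithm, doubling the number of particles in this example doubles the entropy, which matches the fact that entropy is an extensive property.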

⦁ Isothermal process entropy

For a reversible process, the change in entropy is found with calculus as the integral of dQ/T from the initial to the final state of the system, where Q is heat and T is the absolute (Kelvin) temperature.
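To make the integral concrete, here is a minimal sketch that adds up dQ/T numerically while a sample is heated. It assumes the heat is added reversibly and that the specific heat c is constant, so dQ = m c dT and the exact result is m c ln(T_final / T_initial). The function name, the step count, and the approximate specific heat of liquid water (about 4.18 J per gram per kelvin) are choices made for this example.

```python
import math


def entropy_change_heating(mass_g: float, specific_heat: float,
                           t_start_k: float, t_end_k: float,
                           steps: int = 10_000) -> float:
    """Approximate the integral of dQ/T with the midpoint rule.

    Assumption for this sketch: constant specific heat, so each small
    temperature step d_t needs heat dQ = mass_g * specific_heat * d_t.
    """
    d_t = (t_end_k - t_start_k) / steps
    total = 0.0
    for i in range(steps):
        t_mid = t_start_k + (i + 0.5) * d_t   # temperature in mid-step
        total += mass_g * specific_heat * d_t / t_mid
    return total


# Heating 100 g of water from 293.15 K to 353.15 K.
numeric = entropy_change_heating(100.0, 4.18, 293.15, 353.15)
exact = 100.0 * 4.18 * math.log(353.15 / 293.15)
print(numeric, exact)  # the two values should agree closely
```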

When the temperature is constant, the change in entropy (ΔS) is equal to the heat transferred (ΔQ) divided by the absolute temperature (T):

ΔS = ΔQ / T
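The short Python sketch below applies this formula to two cases. The first is melting ice at its melting point, using an approximate latent heat of fusion of 334 J per gram; the second compares the same amount of heat added at two different temperatures. The function name and the chosen numbers are illustrative assumptions.

```python
def entropy_change_isothermal(heat_j: float, temperature_k: float) -> float:
    """Entropy change (J/K) for heat added at constant absolute temperature."""
    if temperature_k <= 0:
        raise ValueError("absolute temperature must be positive")
    return heat_j / temperature_k


# Melting 100 g of ice at 273.15 K (latent heat of fusion ~334 J/g).
heat_to_melt = 100.0 * 334.0
ds_melt = entropy_change_isothermal(heat_to_melt, 273.15)
print(round(ds_melt, 1), "J/K")

# The same 1000 J produces a larger entropy change at the lower temperature.
ds_cool = entropy_change_isothermal(1000.0, 300.0)
ds_warm = entropy_change_isothermal(1000.0, 600.0)
print(ds_cool, ds_warm)
```

Halving the temperature doubles the entropy change for the same heat, which is the inverse proportionality described earlier.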

⦁ Internal energy entropy

One of the most useful equations in physical chemistry and thermodynamics connects entropy to a system’s internal energy (U):

dU = T dS − p dV

The change in internal energy dU equals the absolute temperature T multiplied by the change in entropy dS, minus the pressure p multiplied by the change in volume dV.
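One way to see this relation at work is the isothermal expansion of an ideal gas. This example is not from the text above and rests on two stated assumptions: the gas is ideal (p V = n R T), and its internal energy depends only on temperature, so dU = 0 at constant T. The relation then reduces to T dS = p dV, and integrating gives ΔS = n R ln(V_final / V_initial). The function name is made up for this sketch.

```python
import math

R = 8.314462618  # molar gas constant in J/(mol*K)


def entropy_change_isothermal_expansion(n_mol: float, v_start: float,
                                        v_end: float) -> float:
    """Entropy change (J/K) of an ideal gas expanding at constant temperature.

    Assumption for this sketch: ideal gas with dU = 0 at constant T, so
    T dS = p dV and p = n R T / V. Only the volume ratio matters, so any
    consistent volume unit can be used.
    """
    return n_mol * R * math.log(v_end / v_start)


# One mole of gas doubling its volume gains entropy R * ln(2).
print(entropy_change_isothermal_expansion(1.0, 1.0, 2.0))

# Compressing it back to the original volume lowers the entropy by the same amount.
print(entropy_change_isothermal_expansion(1.0, 2.0, 1.0))
```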

Conclusion

If the entropy of the universe has been increasing continually, it must have been much lower in the past. Because negative entropy makes no sense, this suggests there was a time when entropy was at or near zero. This is consistent with the Big Bang theory, in which the universe began in an extremely compact state resembling a singularity.